Every product development project includes uncertainty over what will happen. The uncertainty—each assumption or best guess—reduces our chances of project success. The job of the project manager and team members is to ensure success by managing risk.

When something goes wrong—deviates from the plan—it stops being a risk and becomes an issue that must be addressed to ensure success. Issues are those conditions that are having a negative impact on your ability to execute the project plan. You can easily identify them because they directly cause schedule slippage and extra work.

There are two simple tools that can—and should—be used on every project to manage risks and issues to prevent disaster. One is the risk register; the other is the issue log. In my experience, these two documents are often conflated, but they are distinct documents that should contain different information and drive different actions.

The risk register is a means of capturing risks that we want to monitor over the life of the project so that we can take action before they have a negative impact on the project. These are conditions that you have decided not to explicitly work into the plan, but don’t want to let “slip under the radar” to create big issues for you later.

The issue log is where you record any problems that were not accounted for in the plan and that threaten to delay the project, push it off budget or reduce the scope (e.g. reduce product performance).

| Issue Log | Risk Register |

|---|---|

| Description of the issue | Description of the risk |

| Underlying problem or cause of the issue | Risk profile—sources of uncertainty and the potential impact |

| Action plan | Potential actions |

| Priority or scheduling | Monitoring plan |

| Who is responsible for assuring this issue is resolved | Who is responsible for monitoring |

| Date opened and date resolved, sometimes a tracking number or other ID | Date last updated, tracking ID |

Issue Log

The issue log is fundamentally about corrective actions. The project has deviated from the plan, and now we need to get back on course to complete the project on time, on budget and with the agreed goals. The issue log is used to capture this information.

While the cause of the problem is often obvious, it is always a good idea to probe for deeper, systemic causes that could lead to further delays. Asking “why?” five times in order to permanently and irrevocably fix a problem doesn’t take very long compared to the total delays that a project can experience.

Risk Register

The hard part of a risk register is the risk profile. Different people respond differently to risk, and some are more comfortable with thinking about uncertain outcomes than others. These differences between people lead to a lot of variation and debate in identifying risks; a good strategy for making risk registers easy is to standardize. The best practices are to focus on the causes of the risk and the probable impacts and to standardize the process.

There has been a lot written about risk management. Some of the best, in my opinion, is the work by De Meyer, Loch and Pich, which was first brought to my attention by Glenn Alleman over at the Herding Cats blog. In their excellent book, Managing the Unknown: A New Approach to Managing High Uncertainty and Risk in Projects, they break down risk into two major components: relationship complexity and task complexity.

When the relationships of stakeholders or partners are complex—groups aren’t aligned—then you can expect disagreements and conflict. Successful strategies for dealing with relationship complexity include increased communication and more rigidly defined relationships.

When tasks are complex—there are many links between tasks, so that changing one task can affect many, or there is a high degree of uncertainty in what needs to be done—then the successful strategies range from critical path management to an entrepreneurial approach of working multiple solutions in parallel (see also De Meyer 2001).

By implementing these pairings of source of risk with management strategy in a risk register template, we can greatly simplify the process and drive more consistent risk management results. Adding in a simple analysis of the impact can help us with prioritization (where do we spend our resources monitoring) and monitoring frequency.

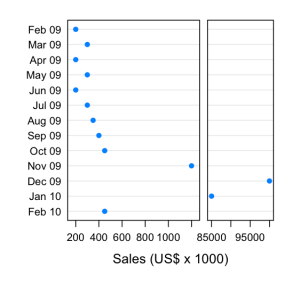

Monitoring is all about how you will know when to do something about the risk. i.e. You want to decide in advance what condition will trigger you to transition this risk to the issue log. Measures should be relevant to the risk, quantitative where possible and the method of measurement should be clearly defined (you don’t want people disagreeing over the project plan just because they measure something differently). Set up measurement intervals that make sense by asking yourself how long you can go without knowing that you have a problem. Plot the results as a time series or on a control chart to allow you to distinguish between normal variation in the measurement and a condition that requires action.

References

- Loch, Christoph H, Arnoud De Meyer, and Michael T Pich. Managing the Unknown. Hoboken, New Jersey: John Wiley & Sons, 2006. Print.

- De Meyer, Arnoud, Christoph H Loch, and Michael T Pich. “Uncertainty and Project Management: Beyond the Critical Path Mentality.” 2001 : 1–23. Print.

You must be logged in to post a comment.